AXRP - the AI X-risk Research Podcast

AXRP (pronounced axe-urp) is the AI X-risk Research Podcast where I, Daniel Filan, have conversations with researchers about their papers. We discuss the paper, and hopefully get a sense of why it's been written and how it might reduce the risk of AI causing an existential catastrophe: that is, permanently and drastically curtailing humanity's future potential. You can visit the website and read transcripts at axrp.net.

- Update frequency: every 24 days
- Average duration: 96 minutes
- Episodes: 59
- Years active: 2020 - 2025

46 - Tom Davidson on AI-enabled Coups

Could AI enable a small group to gain power over a large country, and lock in their power permanently? Often, people worried about catastrophic risks from AI have been concerned with misalignment ris…

45 - Samuel Albanie on DeepMind's AGI Safety Approach

In this episode, I chat with Samuel Albanie about the Google DeepMind paper he co-authored called "An Approach to Technical AGI Safety and Security". It covers the assumptions made by the approach, a…

44 - Peter Salib on AI Rights for Human Safety

In this episode, I talk with Peter Salib about his paper "AI Rights for Human Safety", arguing that giving AIs the right to contract, hold property, and sue people will reduce the risk of their tryin…

43 - David Lindner on Myopic Optimization with Non-myopic Approval

In this episode, I talk with David Lindner about Myopic Optimization with Non-myopic Approval, or MONA, which attempts to address (multi-step) reward hacking by myopically optimizing actions against …

42 - Owain Evans on LLM Psychology

Earlier this year, the paper "Emergent Misalignment" made the rounds on AI x-risk social media for seemingly showing LLMs generalizing from 'misaligned' training data of insecure code to acting comic…

41 - Lee Sharkey on Attribution-based Parameter Decomposition

What's the next step forward in interpretability? In this episode, I chat with Lee Sharkey about his proposal for detecting computational mechanisms within neural networks: Attribution-based Paramete…

40 - Jason Gross on Compact Proofs and Interpretability

How do we figure out whether interpretability is doing its job? One way is to see if it helps us prove things about models that we care about knowing. In this episode, I speak with Jason Gross about …

38.8 - David Duvenaud on Sabotage Evaluations and the Post-AGI Future

In this episode, I chat with David Duvenaud about two topics he's been thinking about: firstly, a paper he wrote about evaluating whether or not frontier models can sabotage human decision-making or …

38.7 - Anthony Aguirre on the Future of Life Institute

The Future of Life Institute is one of the oldest and most prominent organizations in the AI existential safety space, working on such topics as the AI pause open letter and how the EU AI Act can be …

38.6 - Joel Lehman on Positive Visions of AI

Typically this podcast talks about how to avert destruction from AI. But what would it take to ensure AI promotes human flourishing as well as it can? Is alignment to individuals enough, and if not, …

38.5 - Adrià Garriga-Alonso on Detecting AI Scheming

Suppose we're worried about AIs engaging in long-term plans that they don't tell us about. If we were to peek inside their brains, what should we look for to check whether this was happening? In this…

38.4 - Shakeel Hashim on AI Journalism

AI researchers often complain about the poor coverage of their work in the news media. But why is this happening, and how can it be fixed? In this episode, I speak with Shakeel Hashim about the resou…

38.3 - Erik Jenner on Learned Look-Ahead

Lots of people in the AI safety space worry about models being able to make deliberate, multi-step plans. But can we already see this in existing neural nets? In this episode, I talk with Erik Jenner…

39 - Evan Hubinger on Model Organisms of Misalignment

The 'model organisms of misalignment' line of research creates AI models that exhibit various types of misalignment, and studies them to try to understand how the misalignment occurs and whether it c…

38.2 - Jesse Hoogland on Singular Learning Theory

You may have heard of singular learning theory, and its "local learning coefficient", or LLC - but have you heard of the refined LLC? In this episode, I chat with Jesse Hoogland about his work on SLT…

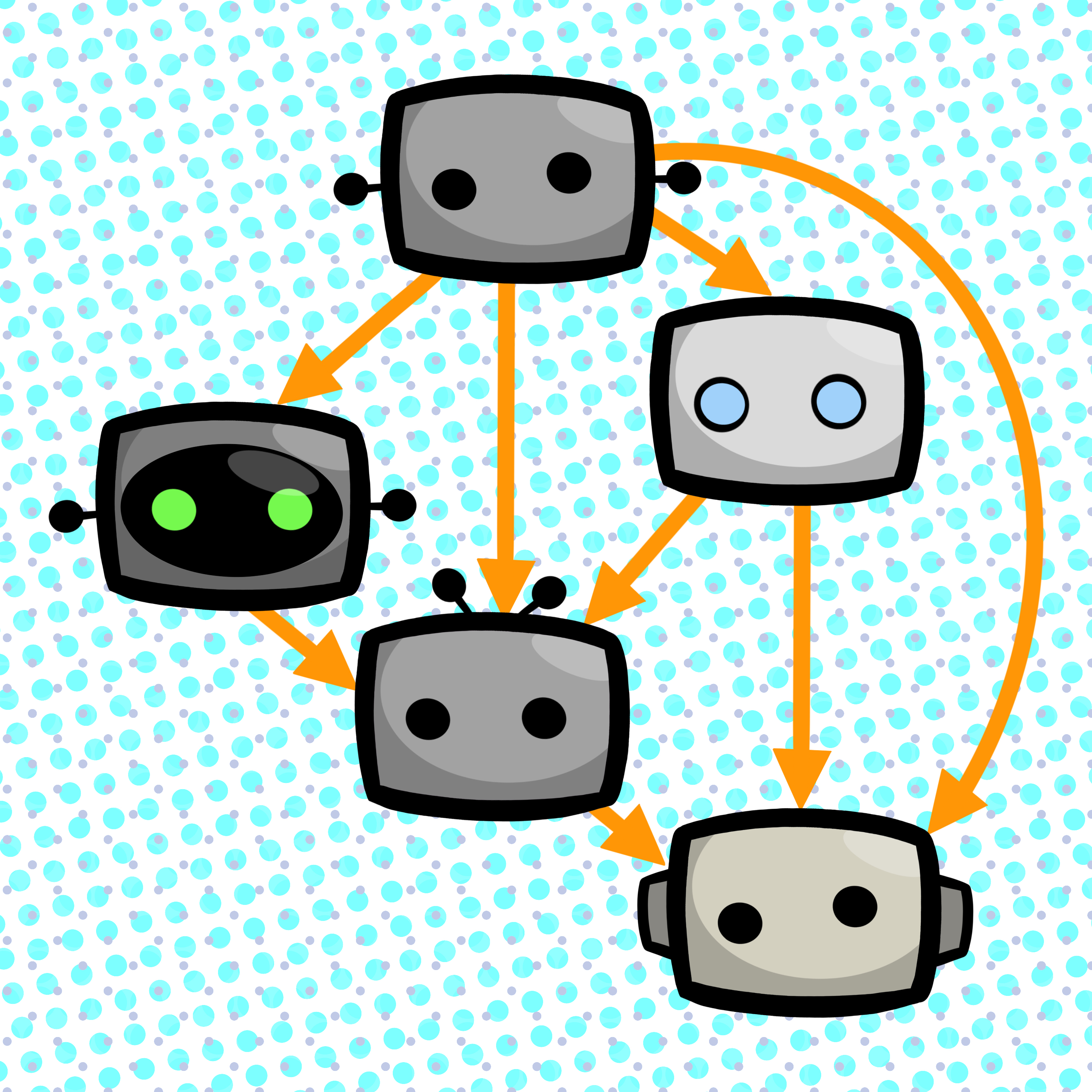

38.1 - Alan Chan on Agent Infrastructure

Road lines, street lights, and licence plates are examples of infrastructure used to ensure that roads operate smoothly. In this episode, Alan Chan talks about using similar interventions to help avo…

38.0 - Zhijing Jin on LLMs, Causality, and Multi-Agent Systems

Do language models understand the causal structure of the world, or do they merely note correlations? And what happens when you build a big AI society out of them? In this brief episode, recorded at …

37 - Jaime Sevilla on AI Forecasting

Epoch AI is the premier organization that tracks the trajectory of AI - how much compute is used, the role of algorithmic improvements, the growth in data used, and when the above trends might hit an…

36 - Adam Shai and Paul Riechers on Computational Mechanics

Sometimes, people talk about transformers as having "world models" as a result of being trained to predict text data on the internet. But what does this even mean? In this episode, I talk with Adam S…

New Patreon tiers + MATS applications

Patreon: https://www.patreon.com/axrpodcast

MATS: https://www.matsprogram.org

Note: I'm employed by MATS, but they're not paying me to make this video.