Pro-Cap: Leveraging a Frozen Vision-Language Model for Hateful Meme Detection

- Author: HackerNoon
- Published: Sat 27 Apr 2024
- Episode Link: https://share.transistor.fm/s/bfa7e301

This story was originally published on HackerNoon at: https://hackernoon.com/pro-cap-leveraging-a-frozen-vision-language-model-for-hateful-meme-detection.

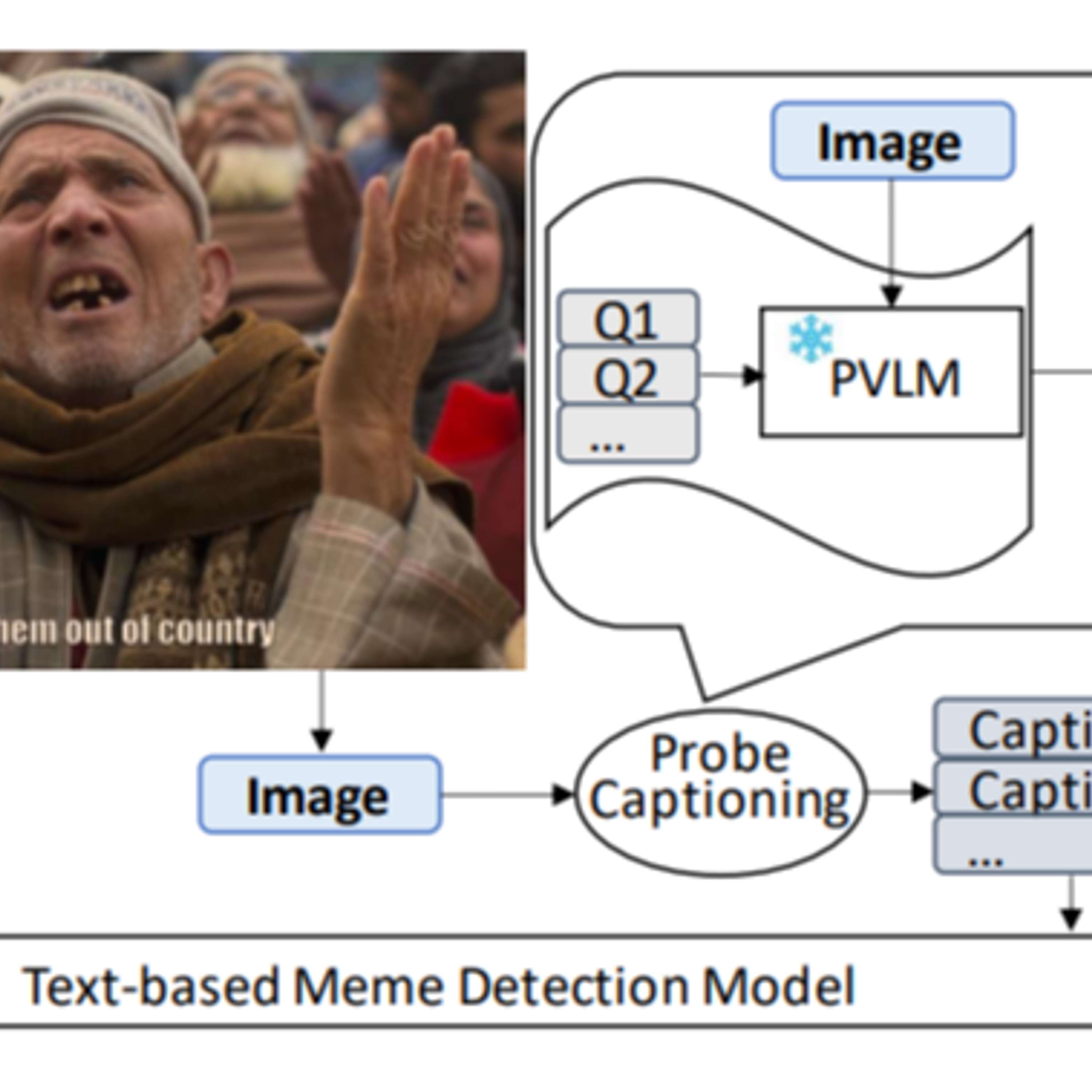

Learn about Pro-Cap, a new method that improves hateful meme detection by prompting frozen pre-trained vision-language models (PVLMs) in a zero-shot manner.

Check more stories related to tech-stories at: https://hackernoon.com/c/tech-stories.

You can also check exclusive content about #frozen-vision-language-models, #zero-shot-learning, #multimodal-analysis, #hateful-meme-detection, #probing-based-captioning, #computational-efficiency, #fine-tuning-models, #vision-language-models, and more.

This story was written by: @memeology. Learn more about this writer by checking @memeology's about page, and for more stories, please visit hackernoon.com.

Pro-Cap introduces a novel approach to hateful meme detection: it queries frozen pre-trained vision-language models (PVLMs) through probing-based captioning, which avoids the cost of fine-tuning the PVLM and yields captions better suited to detecting hateful content in memes.
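To make the idea of probing-based captioning more concrete, here is a minimal Python sketch. It assumes that "probing" means asking a frozen model a fixed set of questions about the meme image and joining the answers into a caption. The question list, the `ask` callable, the `[SEP]` separator, and the function names are all hypothetical and chosen for illustration; they are not taken from the story, and the stand-in model below returns canned answers instead of running a real PVLM.

```python
from typing import Callable, Sequence

# Hypothetical probing questions; the story does not list the actual prompts.
PROBE_QUESTIONS = (
    "What is shown in the image?",
    "Who is in the image?",
    "Where was the image taken?",
)


def probe_caption(
    image: object,
    ask: Callable[[object, str], str],
    questions: Sequence[str] = PROBE_QUESTIONS,
) -> str:
    """Query a frozen model once per question and join the answers.

    `ask` stands in for a zero-shot call to a frozen vision-language model.
    No model weights are updated anywhere in this function.
    """
    answers = []
    for question in questions:
        answer = ask(image, question).strip()
        if answer:  # skip questions the model could not answer
            answers.append(answer)
    return ". ".join(answers)


def build_classifier_input(meme_text: str, caption: str) -> str:
    """Combine the meme's overlaid text with the probed caption.

    The "[SEP]" separator is an assumption; a downstream text classifier
    would define its own input format.
    """
    return f"{meme_text} [SEP] {caption}"


def fake_ask(image: object, question: str) -> str:
    """Stand-in for a frozen PVLM: returns canned answers for the demo."""
    canned = {
        "What is shown in the image?": "a man standing at a podium",
        "Who is in the image?": "a politician",
    }
    return canned.get(question, "")


if __name__ == "__main__":
    caption = probe_caption("meme.png", fake_ask)
    print(build_classifier_input("look who is back", caption))
```

In a real setup, `fake_ask` would be replaced by a call to a frozen visual question answering model, and the combined string would be passed to a separately trained text classifier.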