Setting Up ALM for Power Platform with GitHub Actions

- Author: Mirko Peters - M365 Specialist
- Published: Wed 06 Aug 2025
- Episode Link: https://m365.show/p/setting-up-alm-for-power-platform

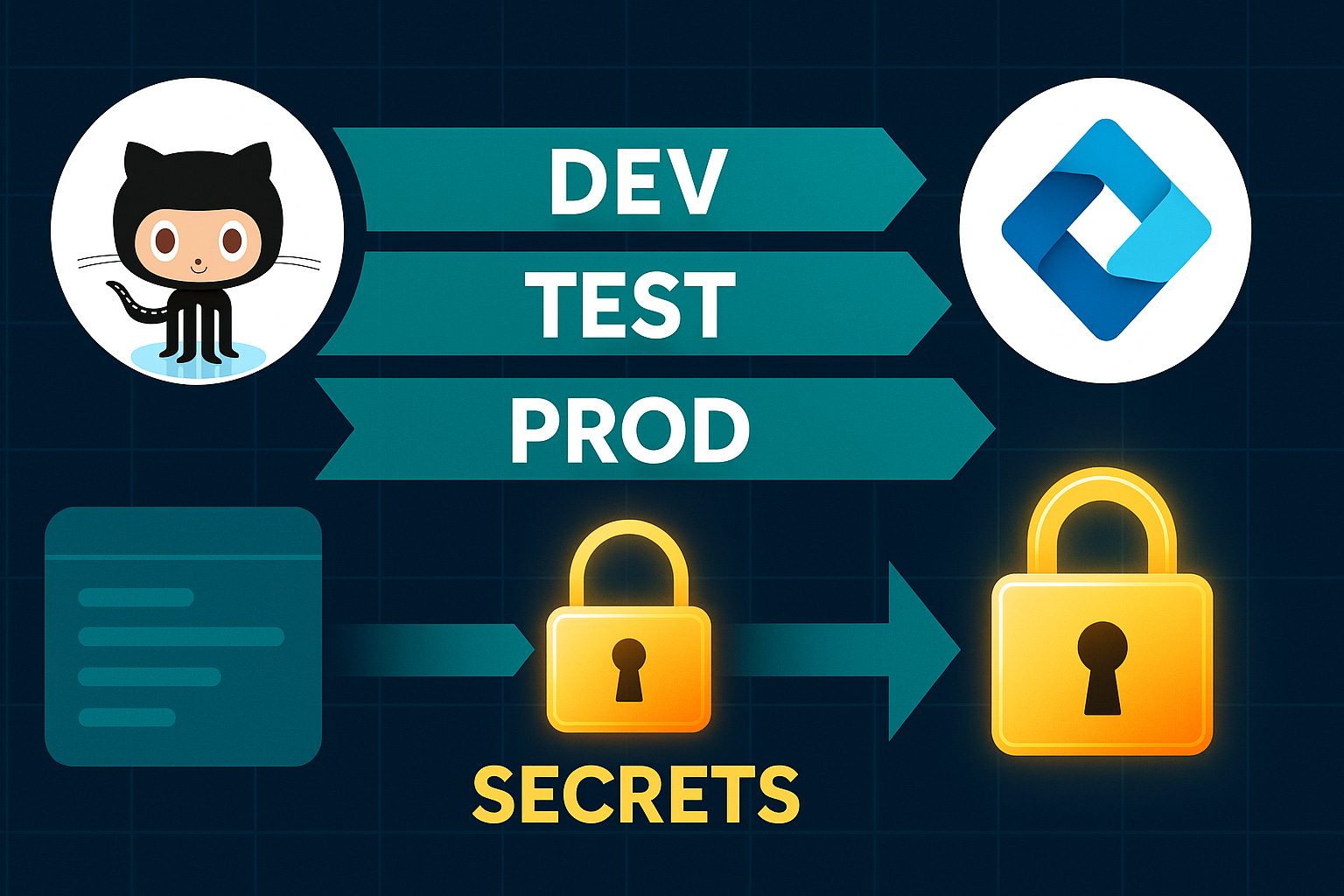

Ever feel like your Power Platform deployments are a black box? You ship an update, hold your breath, and just hope it works across dev, test, and prod. What if you could actually control (and see) every stage of your ALM process using GitHub Actions, with no more guessing or manual patchwork?

Let’s pull back the curtain on how each component (source control, automation, and secure variables) really connects. This isn’t just another walkthrough. This is the ‘why’ most guides leave out, so your next deployment doesn’t leave you guessing.

Why Power Platform ALM Feels Like a Maze

If you've ever tried deploying a Power App and felt that creeping uncertainty, like something important must’ve slipped through the cracks, you’re not alone. On paper, Power Platform promises easy app development, but behind that friendly façade, ALM is anything but straightforward. A web app deploys from source control, builds in a pipeline, and lands in production with predictable behavior. Power Platform, on the other hand, hides half the logic inside drag-and-drop UIs, connector screens, and formulas you can't see in any Git repo. So even if you follow every step from those high-level admin guides, you still run into odd, hard-to-diagnose failures once business-critical apps leave development.

Let’s look at the ALM guides floating around: most of them will walk you through exporting a “solution” zip and importing it somewhere else. Simple enough, right? But the “how” is just one layer. What they skip is the “why”: why does Power Platform deployment break in ways that regular code never does? That’s what causes endless confusion. The root of the problem is that logic, connections, and config in Power Apps aren’t stored like classic code. You’re not just cloning a repo and watching unit tests run. So, when you try to move your app, all those hidden dependencies (connections to Outlook, SharePoint, custom APIs) don’t always travel neatly inside that zip file.

Here’s the scenario: you get a shiny new app working in the dev environment. You export a solution, import to test, and suddenly half the flows stop working. It’s rarely just a file problem. Instead, connections are pointing to the wrong place, permissions don’t line up, and data policies block connections that seemed fine a few minutes ago. Now imagine doing this across three environments (dev, test, and prod), with each one using different connections, data protection policies, approvers, and admin guards.
You can’t copy-paste your way out of it.

The research backs up what admins and makers see every week: the majority of failed Power Platform deployments come from missing connector references or mismanaged environment variables. Missing a connector reference just means the flow can’t find its Outlook or Dataverse connection, so the minute you try to run your app or flow in another environment, you get runtime errors. And because environment variables work differently in Power Platform compared to other Microsoft 365 products, they trip up even experienced developers. Variables are supposed to handle endpoints, keys, or tenant-specific configs. But if someone forgets to update them after exporting a solution, even a harmless-looking change can break a live app.

A lot of us fall into the same trap at first. You might think exporting a solution zip bundles everything needed, but it actually skips over dynamic connector references. For example, say you have an app that uses an HTTP connector for a third-party service in dev. When you import into test or prod, that exact connector instance won’t exist. The connector reference inside your app points to a missing object, and flows inside the solution quietly break until a user or admin manually recreates and remaps connections. If you have more than one maker or admin, it’s even easier to lose track of which reference goes where, and nobody wants that “it worked in dev” postmortem.

Another wrinkle: service principals. Most folks start out using user accounts to export and import solutions, because it’s faster to get started. But when you try to automate with GitHub Actions or any CI system, using a person’s credentials quickly dead-ends. You’ll hit permission walls, sometimes without clear error messages, or even trigger compliance audits, because you’re running critical deployments under a personal account instead of something meant for automation.
Service principals, essentially app identities created in Microsoft Entra ID (formerly Azure AD), handle all the permissions, logging, and auditing your pipeline needs. Without them, your automation chain turns into a patchwork of person-to-person handoffs, and you never know who changed what.

Source control turns into a wildcard, too. With regular code, you have commit histories, PRs, and blame tools. Power Platform’s solution files only represent snapshots, which means you’re missing granular change tracking. Logic tucked away in a canvas app’s screen formulas or in lightly documented flows isn’t visible until you export again. Someone tweaks a flow setting in the UI, and good luck finding out why downstream environments suddenly behave differently.

The end result? Managing ALM for Power Platform feels like solving a puzzle with half the pieces missing, or at least hidden under the box. Invisible dependencies, environment-specific connectors, and variables that you can’t see in code all muddle the usual developer workflow. And that’s before you deal with the fact that production environments almost always have stricter permissions, different data policies, and audit requirements that aren’t obvious in dev or test.

But here’s the upside: this complexity isn’t random. There’s a practical reason why Power Platform handles source, variables, and connectors in such a unique way. It tries to let business users build powerful apps without worrying about code. The side effect is that you, as the person setting up automation, need to track what’s hiding under the hood. If you know where those invisible wires run, you can fix or avoid most of the footguns that derail Power Platform ALM.

Now, if all this sounds like a lot to juggle, you’re not wrong. But there are four ALM pillars that actually help untangle this mess: source, build, test, and deploy. Each has its quirks in Power Platform, but together, they put structure back into what otherwise feels like chaos.
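To make the missing-connector and forgotten-variable problem concrete, here is a minimal Python sketch of a pre-import check. It assumes your pipeline keeps a deployment settings JSON file with `EnvironmentVariables` and `ConnectionReferences` lists, which is the shape Microsoft's tooling generates for solution imports. The function name `find_unmapped` and every value in the sample are made up for illustration.

```python
import json


def find_unmapped(settings: dict) -> list[str]:
    """Return one message per environment variable or connection left blank."""
    problems = []
    for var in settings.get("EnvironmentVariables", []):
        value = var.get("Value")
        if value is None or not str(value).strip():
            problems.append(f"environment variable {var.get('SchemaName')} has no value")
    for ref in settings.get("ConnectionReferences", []):
        connection_id = ref.get("ConnectionId")
        if connection_id is None or not str(connection_id).strip():
            problems.append(f"connection reference {ref.get('LogicalName')} has no connection")
    return problems


# Hypothetical settings file content: one variable and one connection are blank.
SAMPLE_SETTINGS = json.loads("""
{
  "EnvironmentVariables": [
    {"SchemaName": "contoso_ApiBaseUrl", "Value": "https://api.example.test"},
    {"SchemaName": "contoso_SupportMailbox", "Value": ""}
  ],
  "ConnectionReferences": [
    {
      "LogicalName": "contoso_sharedoffice365_1a2b3",
      "ConnectionId": "",
      "ConnectorId": "/providers/Microsoft.PowerApps/apis/shared_office365"
    }
  ]
}
""")

for problem in find_unmapped(SAMPLE_SETTINGS):
    print(problem)
```

Run as a pipeline step, a check like this could fail the job before the import starts, so a blank mapping shows up as a readable message instead of a runtime error in the target environment.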

The Core ALM System: Source, Build, Test, Deploy

Every time someone asks for a Power Platform pipeline that “just works,” the first thing that comes to mind is those four dusty ALM pillars: source, build, test, deploy. In traditional dev land, you could almost run these in your sleep. Power Platform doesn’t let you off that easy. Suddenly, “source” doesn’t mean code; it means wrangling solution files, exporting zipped bundles packed with apps, flows, and sometimes connectors pretending to be part of the source.

Let’s start with the basics, then pick apart what makes each pillar a little strange in this world. You’ve got your solution zip file. It’s not code in the usual sense: open it up, and you’re greeted with XML and JSON metadata files, not the logic you’d spot in TypeScript or C#. But this is the “source” for Power Platform. Teams often get tripped up here. It looks portable until you realize most changes, like updating a formula in a screen or tweaking a flow’s logic, aren’t visible until you export a fresh solution zip. So yes, you can version these solution files in Git, but unless you’re disciplined about exporting after every relevant edit, your history has glaring gaps.

Now comes configuration. For regular apps, you might store connection strings in a config file and call it a day. Power Platform smuggles environment-specific data inside these solutions. It’s not just environment variables; it’s connector references, dynamic endpoints, roles, and permissions bundled alongside the business logic. If you miss updating these before exporting from dev or test, you’re locking in pointers to the wrong place. I’ve watched teams religiously commit their solution zip files, only to deploy and realize half their app is still talking to the dev Dataverse instead of prod, because nobody re-mapped connector references.

Then we have build. The word “build” usually brings up images of compiling code and watching green ticks in a CI job.
With Power Platform, the build process is about stashing those zip bundles, double-checking schema, and verifying dependencies. In a GitHub Actions pipeline, a build job grabs your committed solution file, then uses Microsoft’s Power Platform CLI to unpack, validate, and repack the solution. The validation step is where the magic (or chaos) happens. It might catch broken references or unsupported actions. Worse, if a change in your flow relies on a custom connector that never made it into source control, your build silently packages something incomplete.

Here’s where things get tricky. Those “build” jobs are less about compiling and more about orchestrating a reliable export and making sure what’s inside is shippable. Teams who skip this or rush it quickly land in situations where a build technically “succeeds,” but what ends up in UAT or prod is missing half its intended features. Microsoft keeps pushing the message that “solution files make ALM portable,” but there’s always a footnote: portability depends on connectors being properly mapped and roles set up across environments.

Testing is the next sore spot. In a web app pipeline, automated tests might run unit tests or UI checks. For Power Platform, what counts as a “test” is up for debate. Sometimes it’s solution validation: does the solution open, and do key dependencies resolve? Other times, it’s running test flows or checking that connectors respond in a test environment. A lot of times, testing is manual, because validating business logic inside a canvas app isn’t wired into automated pipelines yet. But you can automate checks to spot missing connectors, validate critical flows, or even ping a test environment just to prove credentials are working.

Deployment drives the pain home. When a standard app deploys, you might just push a web artifact onto Azure.
With Power Platform, you have to map connections, assign permissions, update secrets, swap environment variables, and finally trigger an import using a service principal. Never use a regular account for this, otherwise you’re stuck with audits and permission denials. GitHub Actions can tie this process together, but only if each job knows which environment to target and which secrets to use. I’ve seen teams try to shortcut this by using a single set of credentials across dev and prod, which is a recipe for chaos: data leaks, permission errors, and broken features that only show up in one environment.

Take a real-world team I worked with a few months back. They did most things right: standard Git repos, versioned solution zips, and clear branching. But their pipeline always broke at deployment. Why? They never bothered to swap environment variables or update connector references before importing into prod. That left key flows pointing to the wrong endpoints, with data trickling into the wrong systems. The fix? Scripted steps in their GitHub pipeline that swapped variables and remapped every connector on import.

Microsoft’s own guidance is blunt about this: use solution files for moving things between environments, but always handle connector references and role mapping as part of your pipeline. They point out that skipping these steps is the number one way to break apps, especially as environments get more locked down.

Once you treat source, build, test, and deploy as connected moving parts, not just isolated steps, it’s easier to see why so many ALM attempts fall over, and how you can actually troubleshoot issues instead of crossing your fingers. Next, let’s get into how GitHub Actions coordinates this dance: the triggers, branching, and job separation that keep your Power Platform automation both flexible and secure.
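The “swap variables and remap every connector on import” step from that team’s fix could look roughly like the Python sketch below. It is not their actual script. It assumes a deployment settings template (the `EnvironmentVariables` / `ConnectionReferences` JSON shape used for solution imports) checked in with blank values, plus per-environment values supplied by the pipeline. `apply_target_values` and all names and IDs in the sample are hypothetical.

```python
import copy


def apply_target_values(settings: dict, variable_values: dict, connection_ids: dict) -> dict:
    """Return a copy of the settings with values filled in for one target environment.

    Raises KeyError if any variable or connection reference has no target value,
    so an incomplete mapping stops the deployment instead of shipping dev pointers.
    """
    filled = copy.deepcopy(settings)  # leave the checked-in template untouched
    missing = []
    for var in filled.get("EnvironmentVariables", []):
        name = var["SchemaName"]
        if name in variable_values:
            var["Value"] = variable_values[name]
        else:
            missing.append(name)
    for ref in filled.get("ConnectionReferences", []):
        name = ref["LogicalName"]
        if name in connection_ids:
            ref["ConnectionId"] = connection_ids[name]
        else:
            missing.append(name)
    if missing:
        raise KeyError("no target value for: " + ", ".join(missing))
    return filled


# Hypothetical template and prod values, for illustration only.
TEMPLATE = {
    "EnvironmentVariables": [{"SchemaName": "contoso_ApiBaseUrl", "Value": ""}],
    "ConnectionReferences": [
        {
            "LogicalName": "contoso_shareddataverse_9f8e7",
            "ConnectionId": "",
            "ConnectorId": "/providers/Microsoft.PowerApps/apis/shared_commondataserviceforapps",
        }
    ],
}
PROD_VARIABLES = {"contoso_ApiBaseUrl": "https://api.example.com"}
PROD_CONNECTIONS = {"contoso_shareddataverse_9f8e7": "prod-connection-id"}

prod_settings = apply_target_values(TEMPLATE, PROD_VARIABLES, PROD_CONNECTIONS)
print(prod_settings["EnvironmentVariables"][0]["Value"])
```

The design choice worth noting is the hard failure: a value that is missing for prod raises an error rather than silently keeping whatever was exported from dev.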

GitHub Actions: Connecting the Dots with Triggers and Jobs

If you’ve waited for a GitHub Actions pipeline to finish after updating your Power App, you already know: automation doesn’t mean instant, and it definitely doesn’t mean magic. Most teams hit that wall right after the excitement of seeing their first workflow run. You set up a script, wire it into your repository, and expect every new commit to roll out cleanly across all environments. Then, reality checks in. Instead of just kicking off a script, GitHub Actions uses a logic chain built around triggers, jobs, and handoffs between environments. That order and structure is what keeps deployments from becoming a tangle of failed steps and strange errors.

The starting point in this system is the trigger. Most first-timers create a workflow that fires on every push, or whenever a pull request lands in the main branch. On the surface, that looks like best practice: why not run your pipeline every time work changes? Here’s the catch: when you only set a trigger on main, everything gets funneled through a single track. What if changes need to hit dev, but not test or prod? What if you’re ready to push to prod, but test is still running validation? Teams that stick to one-size-fits-all triggers tend to run into problems where features meant for development environments sneak into production, or test deployments suddenly overwrite prod settings.

You can branch workflows for each environment (dev, test, and prod) and set up very specific triggers for each. For example, set up a workflow that only runs on pushes to a “dev” branch, or fire a different workflow when someone merges into “release/prod.” This approach gives more control and creates a clear fence between work-in-progress and live changes. But a lot of teams never revisit their triggers after creating them. That’s how secrets from one environment can slip into another, and config meant for dev ends up in prod by accident.

One team I worked with tried to run their entire ALM process from a single workflow.
For a while, it seemed fine, until someone noticed that a production database connection string showed up in the dev environment. They didn’t realize their secrets were being shared between jobs, and once code moved between environments, those secrets leaked with it. It wasn’t an obvious crash or error; it was silent. This kind of data spill is more common than you’d think, especially if you don’t set up your workflows to separate secrets and environment variables.

Each job in a GitHub Actions workflow handles a clear piece of the process. One job might handle exporting the solution from a source environment. Another job takes care of validation: unpacking, scanning through solution metadata, checking all the dependencies, and making sure connector references exist. Next comes the job that prepares the environment-specific variables, translating the solution so it fits its target environment. The last job triggers the import process, using service principals to write changes into the right environment.

You might think of environment variables and secrets as basic placeholders, but in practice, they’re the glue holding everything together. Connection strings, API keys, and shared passwords all need to be swapped between jobs, but they can never leak across boundaries. In Power Platform ALM, you may have a different Dataverse connection for each environment, or need to swap endpoints depending on which flow or Power App you’re targeting. If you reuse variables or hardcode secrets, you end up with either a brittle pipeline or, worse, significant security risks. The import process depends on pulling secrets only from the right “vault,” so production isn’t exposed by test or dev mishaps.

A good mental model for secrets in GitHub Actions is to picture each environment as having its own digital vault: a locked box only the right jobs can see.
GitHub gives you precise controls: you can scope secrets to environments so a job running in dev can’t access prod credentials, and vice versa. Set up these vaults, and even if someone tweaking the pipeline tries something risky, environment protection rules prevent a misstep from crossing over. Microsoft’s and GitHub’s own documentation both hammer this point. They recommend environment protection rules and strict secret scoping to stop cross-environment leaks before they even start.

Without that kind of protection, even the best-designed ALM workflows fall apart. Imagine a workflow deploying to prod while still holding onto a dev connection string, or a test environment suddenly with access to prod data. It doesn’t always break things visibly; sometimes it just means compliance flags go off, or logs fill up with subtle errors that slowly pile into bigger problems. This is why branching workflows and scoping secrets aren’t just advanced topics. They’re the safety net that keeps your automation from quietly unraveling.

There’s also value in splitting workflows not just by environment, but by responsibility. Code that exports and validates solutions shouldn’t even know how to deploy or swap secrets. If every job handles one piece of the puzzle, it becomes much easier to spot where things break, roll back, and audit changes after the fact. Secrets stay in their vaults, jobs follow clearly scoped permissions, and pipeline failures point straight to the piece that needs fixing.

Understanding how GitHub Actions hands off work from one job to another, while keeping secrets tightly scoped and triggers clearly defined, is what takes ALM from a hopeful experiment to a reliable, secure, and predictable practice.
This workflow segmentation isn’t just about matching best practices; it’s what blocks quiet security leaks and accidental overwrites, and it cuts off a whole class of invisible errors before they start to haunt your environments.

Now, with the pieces working together through properly scoped automation, it’s time we tackle another friction point: connectors, service principals, and the small but critical pitfalls that can stall your deployments right as you get confident.
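The trigger-and-vault idea can be modeled in a few lines of Python. This is only a mental-model sketch, not how GitHub implements environments or secrets. The `refs/heads/...` strings follow the format GitHub Actions exposes as `GITHUB_REF`, the “dev” and “release/prod” branches come from the example above, and the `release/test` branch, the `EnvironmentVault` class, and the secret names are assumptions added for illustration.

```python
# Map each branch ref to exactly one target environment.
BRANCH_ENVIRONMENTS = {
    "refs/heads/dev": "dev",
    "refs/heads/release/test": "test",  # hypothetical branch name
    "refs/heads/release/prod": "prod",
}


def resolve_environment(git_ref: str) -> str:
    """Return the environment a ref may deploy to, or refuse unknown refs."""
    try:
        return BRANCH_ENVIRONMENTS[git_ref]
    except KeyError:
        raise ValueError(f"{git_ref} is not allowed to deploy anywhere") from None


class EnvironmentVault:
    """Toy model of environment-scoped secrets: a job sees one vault only."""

    def __init__(self, secrets_by_environment: dict):
        self._secrets = secrets_by_environment

    def secrets_for(self, environment: str) -> dict:
        # Return a copy so a job cannot modify another job's view.
        return dict(self._secrets[environment])


vault = EnvironmentVault({
    "dev": {"CLIENT_SECRET": "dev-secret"},
    "prod": {"CLIENT_SECRET": "prod-secret"},
})

target = resolve_environment("refs/heads/dev")
print(target, sorted(vault.secrets_for(target)))
```

In a real pipeline, GitHub’s environment-scoped secrets and protection rules do this job. The sketch just shows why a job that resolved to dev never has a path to the prod values.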

Secrets, Service Principals, and the Trouble with Connectors

If you’ve ever tried moving a Power App or Flow from dev to prod, you know the story: things work flawlessly in one environment, then as soon as you switch over, everything grinds to a halt or gives you those mysterious connection errors. It isn’t unique to a specific team; every Power Platform admin eventually runs into this wall. The root of the problem lies in how connectors and secrets behave behind the scenes. You can’t just export a solution zip from development and expect it to work somewhere else, because the wires it depends on change with every environment.

Take connector references for starters (Microsoft’s documentation calls them connection references). In Power Platform, a connector is never just a static bit of information bundled into your app. It’s dynamic, mutable, and often unique per environment. When you export your solution, connector references act almost like bookmarks: they point to connection objects that only exist in the environment they were built in. So when you import into test or prod, your fancy HTTP endpoint or Dataverse link doesn’t necessarily get recreated the same way, or at all. This is where teams get caught out. If dev is using a connector to test data and that exact configuration isn’t mirrored in prod, your flow or app either fails silently or hangs on the first attempt to connect.

A lot of folks try to get around this by sharing user credentials across environments. They figure: if you use the same account or password that created the connection in dev, maybe it’ll just work in prod. The reality is, this invites a host of problems, everything from permission denials to compliance audit triggers. Microsoft’s own guidance is clear: using individual user credentials for automated deployments just isn’t viable or secure. It puts your automation at the mercy of password resets and can leave a muddy trail in your audit logs. There’s no clear accountability, and eventually, permissions block the pipeline when the original user is flagged, removed, or loses access.

Enter service principals.
If you haven’t worked with them, picture a service principal as a digital extra set of hands, purpose-built for automation. Unlike a regular user, a service principal is tied to an app registration in Microsoft Entra ID (formerly Azure AD), not a real person. You give it the minimum permissions needed to run your deployment tasks. That sounds simple, but the payoff is big: instead of relying on a user who could leave the organization or change roles, deployments stay consistent, auditable, and traceable. Every environment gets access only to the right connections and resources, and any changes are logged under this “robot account.” When something fails, you have a clean audit trail, and you don’t end up with orphaned flows nobody can fix.

On the problem of mapping connectors, consider what happens when environments drift apart. Maybe the dev team got approval for a new custom connector that, let’s say, hits a sandbox API. When it comes time to move to prod, that connector isn’t just missing; it may not even be allowed by organizational data policies. If you forget to remap, the app calls out to the wrong endpoint or breaks entirely. I saw this land hard at a healthcare group recently: one missed connector mapping triggered the use of test data in production and nearly created a compliance incident. Their automated pipeline exported everything as planned, but the connector reference inside the solution still pointed back to an old test database. That wasn’t flagged by Power Platform until real data started flowing through the wrong pipes.

Environment variables step in to ease some of this pain. Unlike hardcoding endpoints or API keys, environment variables let you separate what *changes* across environments from what stays the same. You can swap out endpoints, API keys, or other secrets with each deployment, without rewriting your app or flow.
For example, a flow that works with a dev Dataverse table can be reconfigured on import to use the prod table, simply by changing the environment variable inside your deployment process. GitHub Actions pipelines make this practical: each job can inject the right value at the right time.

But even here, discipline is key. Forget to update a variable, or let secrets leak across environments, and suddenly your workflow is exposed. Microsoft emphasizes the need to scope secrets carefully and map connector references intentionally. Their DLP (Data Loss Prevention) policies exist for a reason: to keep sensitive information from wandering between environments or surfacing in the wrong place. If you try to bypass these by sharing variables or connections, you’ll trip over corporate security or compliance controls. More importantly, you lose predictability, because there’s no longer a line between what’s meant for dev versus test or prod.

The benefit, when you get all of this right, is hard to overstate. Service principals combined with well-managed environment variables and properly mapped connectors transform your pipeline. Your deployments become predictable. When things break, you have a clear chain of custody and know exactly where to look: was it a connector mapping, a variable, or a missed permission? Auditors can follow changes, and there’s no suspense when someone leaves the organization or changes passwords.

It all comes down to handling secrets, connectors, and service principals like they matter, because in Power Platform ALM, they do. Miss a step here, and hours of manual patching follow. Get them right, and your pipeline finally works the way it should: resilient, secure, and transparent. When things *do* go wrong, the troubleshooting process doesn’t start from square one. You already have the evidence of what moved, who moved it, and how.
And that brings us to the last piece: what to do when ALM still throws a wrench in the works, and how to unstick even the most tangled deployments.
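For a feel of what “deploying as a service principal” means at the wire level, here is a hedged Python sketch that builds (but does not send) an OAuth 2.0 client-credentials token request against the Microsoft identity platform. The `PP_*` variable names are hypothetical, the tenant, client ID, and environment URL in the example are placeholders, and a real pipeline would normally let Microsoft’s CLI or GitHub actions handle authentication rather than hand-rolling it.

```python
import os
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical names for environment-scoped pipeline secrets.
REQUIRED = ("PP_TENANT_ID", "PP_CLIENT_ID", "PP_CLIENT_SECRET", "PP_ENVIRONMENT_URL")


def build_token_request(env=os.environ) -> Request:
    """Build a client-credentials token request for the app registration."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        # Fail early and name the gap, instead of a vague auth error later.
        raise RuntimeError("missing pipeline secrets: " + ", ".join(missing))
    url = f"https://login.microsoftonline.com/{env['PP_TENANT_ID']}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": env["PP_CLIENT_ID"],
        "client_secret": env["PP_CLIENT_SECRET"],
        # The token is scoped to one environment URL, not to a person.
        "scope": env["PP_ENVIRONMENT_URL"].rstrip("/") + "/.default",
    }).encode("ascii")
    headers = {"Content-Type": "application/x-www-form-urlencoded"}
    return Request(url, data=body, headers=headers, method="POST")


# Placeholder values for illustration; never hardcode real secrets like this.
example_env = {
    "PP_TENANT_ID": "00000000-0000-0000-0000-000000000000",
    "PP_CLIENT_ID": "11111111-1111-1111-1111-111111111111",
    "PP_CLIENT_SECRET": "not-a-real-secret",
    "PP_ENVIRONMENT_URL": "https://contoso.crm.dynamics.com/",
}
request = build_token_request(example_env)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the actual exchange. Because the identity is the app registration, a password reset or a departing colleague does not break the pipeline, which is the point made above.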

Conclusion

If you’ve built Power Platform solutions for a while, you know ALM isn’t just about copying files and hoping for the best. Each piece (the solution files, connectors, service principals, and environment variables) serves a real purpose, and understanding how they interact is what lets you avoid costly surprises down the line. When Microsoft moves the goalposts or another connector changes, the teams that adapt the fastest are the ones who actually know why each step matters and don’t just tick boxes. Want to share something that broke (spectacularly or quietly) in your deployment? Drop it below, and we’ll dig into it together.
