Fabric Dataflows Gen2: The Future of ETL in Microsoft Fabric

- Author: Mirko Peters - M365 Specialist
- Published: Sun 17 Aug 2025
- Episode Link: https://m365.show/p/fabric-dataflows-gen2-the-future

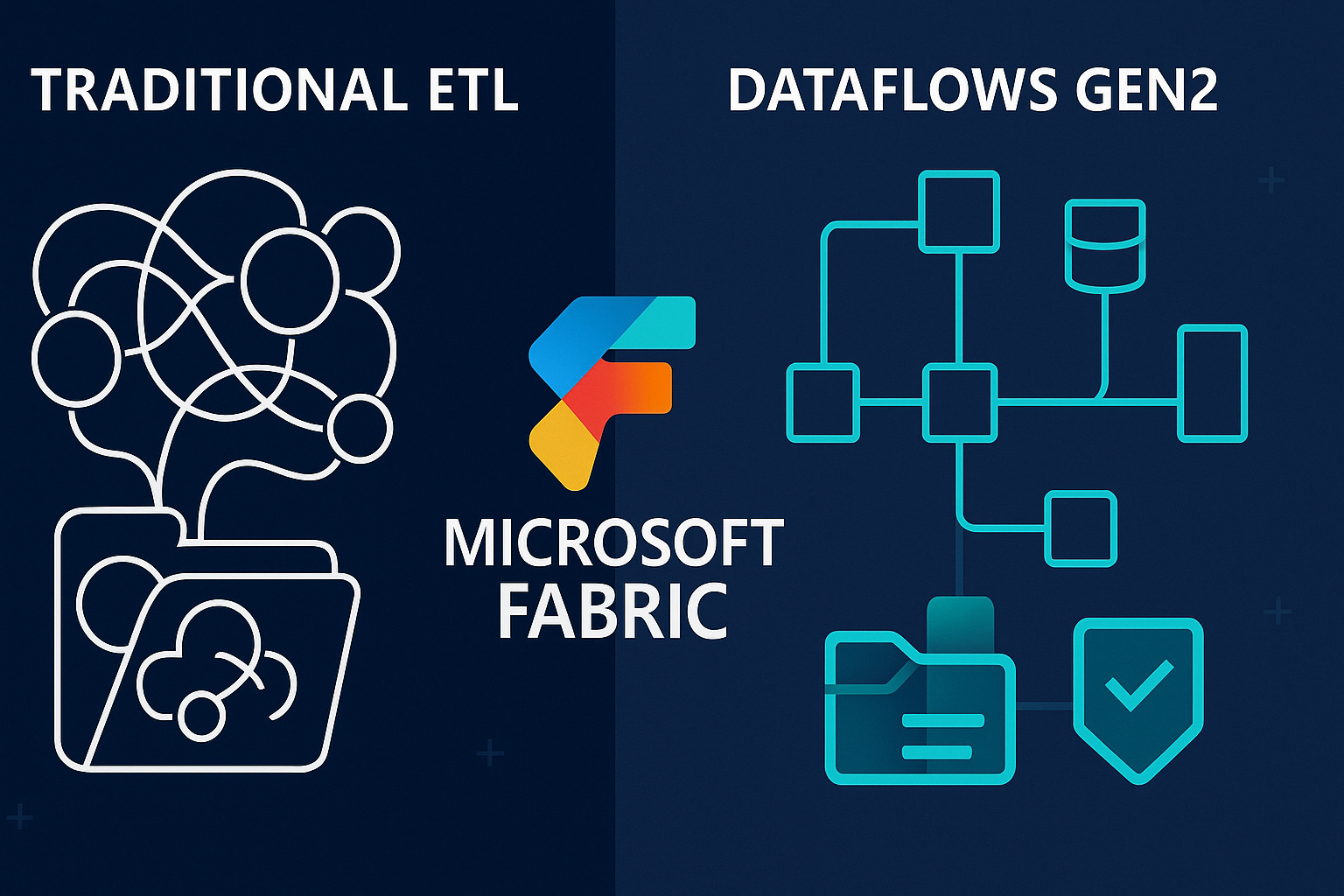

If you’ve spent hours duct-taping together Power Query scripts and manually tweaking ETL jobs, Microsoft just changed the game. Fabric Dataflows Gen2 isn’t just a facelift; it’s a complete rethink of how your data moves from source to insight. In the next few minutes, you’ll see why features like compute separation and managed staging could mean the end of your most frustrating bottlenecks. But before we break it down, there’s one Gen2 upgrade you’ll wonder how you ever lived without… and it’s hidden in plain sight.

From ETL Pain Points to a Unified Fabric

Everyone’s fought with ETL tools that feel like they were built for another era: jobs ran overnight because nobody expected data to refresh in minutes, and error logs told you almost nothing. Now imagine the whole thing redesigned, not just re-skinned, with every moving part built to actually work together. That’s the gap Microsoft decided to close.

For a long time in the Microsoft data stack, ETL lived in disconnected pockets. You might have a Power Query script inside Power BI, a pipeline in Data Factory running on its own schedule, and maybe another transformation process tied to a SQL data warehouse. Each of those used its own refresh logic, its own data store, and sometimes its own credentials. You’d fix a transformation in one place, only to realize months later that another pipeline was still using the old logic. And if you wanted to run the same flow in two environments, good luck keeping them aligned without a manual checklist.

Even seasoned teams hit a wall trying to scale that mess. Adding more data sources meant adding more pipeline templates and more dependency chains. If one step broke, it could hold up a whole set of dashboards across departments. So the workaround became building parallel processes. Sales would pull its own copy of the data to make sure a report landed on time, while Operations ran its own load from the same source to feed monthly planning. The result? Two big jobs pulling the same data, transforming it in slightly different ways, then arguing over why the numbers didn’t match.

It wasn’t just the duplication. Maintaining those fragile flows ate an enormous amount of development time. Data teams that audit their own hours often report that a third to half of their monthly ETL effort goes to patching, rerunning, or reworking jobs rather than building anything new. Every upstream schema change forced edits across multiple tools. Version tracking was minimal. And because the processes were segmented, troubleshooting could mean jumping between five or six different environments before finding the root cause.

Meanwhile, Microsoft kept expanding its platform. Power BI became the default analytics layer. The Fabric Lakehouse emerged as a unified storage and processing foundation. Warehouses provided structured, governed tables for enterprise systems. But the ETL story hadn’t caught up: the connective tissue between these layers still involved manual exports, linked-table hacks, or brittle API scripts. As more customers adopted multiple Fabric components, the lack of a unified ETL model started to block adoption of the ecosystem as a whole.

That’s the context where Gen2 lands. Not as another “new feature” tab, but as a fundamental shift in how ETL is designed to operate inside Fabric. Instead of each tool owning its own isolated dataflow logic, Gen2 positions dataflows as a core Fabric resource, on par with your Lakehouse, Warehouse, and semantic models. It treats transformation steps as assets that can move between these environments without losing fidelity or needing a custom bridge.

This matters because it changes what “maintainable” actually looks like. In a Gen1-era setup, the same transformation might exist three times: in Power Query for BI, in a pipeline feeding the warehouse, and in a notebook prepping the Lakehouse. In Gen2, it exists once, and every connected service points to that canonical definition. If an upstream column changes, there’s one place to update the logic, and every dependent service picks up the change on its next refresh. That’s the kind of alignment that stops shadow processes from creeping in.

And because this overhaul involves more than just integration points, it sets the stage for other benefits. The architecture itself has been realigned to make scaling independent of storage, something we’ll get into next. The authoring experience has been rebuilt with shared governance in mind. And deployment pipelines aren’t an afterthought; they’re baked into the lifecycle from the start.

So the real takeaway is that Gen2 is a first-class Fabric citizen. It’s built into the platform’s identity, not sitting on the sidelines as an add-on service. Instead of stitching together three or four tools every time you move data from source to insight, you find the pieces already part of the same framework. Which is why, when we step into the architectural blueprint of Gen2, you’ll see how those connections hold up under real-world scale.
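
A tiny Python model can make the “exists once” idea concrete. This is a conceptual sketch under stated assumptions, not Fabric code: `clean_orders`, `COLUMN_RENAMES`, and the three consumer names are hypothetical stand-ins for a dataflow definition and the services that read from it. When the upstream source starts sending `cust_id` instead of `customer_id`, the fix goes into one mapping, and every consumer gets the same corrected output.

```python
from typing import Callable

Row = dict[str, object]

# Hypothetical upstream change: the source now sends "cust_id" instead of "customer_id".
# The fix lives in exactly one place, inside the canonical definition.
COLUMN_RENAMES = {"cust_id": "customer_id"}


def clean_orders(rows: list[Row]) -> list[Row]:
    """Canonical transformation: normalize column names, drop rows with no amount."""
    cleaned: list[Row] = []
    for row in rows:
        renamed = {COLUMN_RENAMES.get(key, key): value for key, value in row.items()}
        if renamed.get("amount") is not None:
            cleaned.append(renamed)
    return cleaned


# Each (hypothetical) consumer references the same function instead of a private copy.
CONSUMERS: dict[str, Callable[[list[Row]], list[Row]]] = {
    "power_bi_report": clean_orders,
    "warehouse_load": clean_orders,
    "lakehouse_prep": clean_orders,
}


def refresh_all(source_rows: list[Row]) -> dict[str, list[Row]]:
    """Refresh every consumer from the same source rows and the same definition."""
    return {name: transform(source_rows) for name, transform in CONSUMERS.items()}


if __name__ == "__main__":
    source = [
        {"cust_id": 1, "amount": 120.0},
        {"cust_id": 2, "amount": None},
    ]
    for name, rows in refresh_all(source).items():
        print(name, rows)
```

Because every consumer holds a reference to the same function, there is no second copy that can drift. The sketch deliberately ignores scheduling, storage, and security, which the platform itself is responsible for.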

The Core Architecture Blueprint

You can’t see them in the interface, but under the hood Dataflows Gen2 runs on a very different set of building blocks than Gen1, and those changes shift how you think about performance, flexibility, and long-term upkeep. On the surface it still looks like you’re authoring a transformation script. Underneath, there’s a compute engine that runs independently from where your data lives, a managed staging layer for intermediate steps, a broader set of connectors, an authoring environment built for collaborative work, and deep hooks into Fabric’s deployment pipeline framework. Each part has a job, and the way they fit together is what makes Gen2 behave so differently in practice.

In Gen1, compute and storage were tightly coupled. If you needed to scale up transformation performance, you were often also scaling the backend storage, whether you needed to or not. That meant more cost and more risk when resources spiked. Troubleshooting was equally frustrating. One team might hit refresh on a high-volume dataflow right as another job was processing, and suddenly the whole thing would slow to a crawl or fail outright. You’d get partial loads and half-finished temp tables, and you could spend a day debugging only to find it was a resource-contention problem all along.

Think about a nightly refresh window where a job pulls in millions of rows from multiple APIs, applies transformations, and stages them for Power BI. In the old setup, if external API latency increased and the job ran long, it could collide with the next scheduled flow and both would fail. You couldn’t isolate and scale the transformation power without dragging storage usage, and costs, along for the ride.

Gen2’s compute separation removes that choke point. The transformation logic executes in a dedicated compute layer, which you can scale up or down based on workload size without touching the underlying storage. If you have a month-end process that temporarily needs high throughput, you give it more compute just for that run. Your data lake footprint stays constant. When the run finishes, compute spins down and costs stop accruing. This not only solves the collision issue; it also makes performance tuning a precise exercise instead of a blunt-force resource increase.

The other silent workhorse is managed staging. Every transformation flow has intermediate steps (partial joins, pivots, type casts) that don’t need to live on disk forever. In Gen1, these often landed in semi-permanent storage until someone cleaned them up manually, taking up space and creating silent costs. Managed staging in Gen2 is an invisible workspace where those steps happen. Temp data exists only as long as the process needs it, then it’s automatically purged. You never have to script cleanup jobs or worry about zombie tables eating storage over time.

Here’s a real-world example: imagine a retail analytics pipeline that ingests point-of-sale data, cleans it, joins it with product metadata, aggregates by region, and outputs both a detailed table and a summary for dashboards. In Gen1, each of those joins and aggregates would either slow down the single-threaded process or produce intermediate files you’d later have to delete. In Gen2, the compute engine processes those joins in parallel where possible, using managed staging to hold temporary results just long enough to feed the next transformation. By the time the flow finishes, only your output tables exist in storage. That means faster processing, no manual cleanup, and predictable storage usage every time it runs.

None of these features live in isolation. The connectors feed the compute engine; managed staging is part of that execution cycle; the authoring environment ties into deployment pipelines so you can push a tested dataflow into production without manual rework. The separation of responsibilities means you can swap or upgrade individual parts without rewriting everything. It also means that if something fails, you know which module to investigate, instead of sifting through one giant, monolithic process for clues.

This modular architecture is the real unlock. It’s what takes ETL from something you tiptoe around to something you can design and evolve with confidence. Scaling stops being a guessing game. Maintenance stops being a fire drill. And because every part is built to integrate with the rest of Fabric, the same architecture that powers your transformations is ready to hand off data cleanly to the rest of the ecosystem, which is exactly where we’re going next.
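
To illustrate the staging lifecycle from the retail example, here is a minimal Python sketch that uses a local temporary directory as a stand-in for the staging area. That mechanism is an analogy only: it says nothing about how Fabric stores or purges staged data internally, and the sample rows, the `stage` helper, and `run_pipeline` are hypothetical. It models just the cleanup behavior (intermediates exist for the duration of the run, and only the outputs survive), not the parallel join execution.

```python
import json
import os
import tempfile
from collections import defaultdict

# Hypothetical sample inputs standing in for point-of-sale data and product metadata.
POS_ROWS = [
    {"sku": "A1", "region": "North", "qty": "2", "price": "10.00"},
    {"sku": "B2", "region": "South", "qty": "1", "price": "25.50"},
    {"sku": "A1", "region": "South", "qty": "", "price": "10.00"},  # bad row, dropped
]
PRODUCTS = {"A1": {"category": "Snacks"}, "B2": {"category": "Beverages"}}


def stage(staging_dir: str, name: str, rows: list) -> str:
    """Write an intermediate result into the staging area and return its path."""
    path = os.path.join(staging_dir, f"{name}.json")
    with open(path, "w", encoding="utf-8") as f:
        json.dump(rows, f)
    return path


def load(path: str) -> list:
    """Read an intermediate result back from the staging area."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def run_pipeline() -> tuple[list, dict, str]:
    """Clean -> join -> aggregate, holding intermediates only in a temp directory."""
    with tempfile.TemporaryDirectory(prefix="staging_") as staging_dir:
        # Step 1: clean (cast types, drop rows with a missing quantity).
        cleaned = [
            {**r, "qty": int(r["qty"]), "price": float(r["price"])}
            for r in POS_ROWS
            if r["qty"]
        ]
        cleaned_path = stage(staging_dir, "cleaned", cleaned)

        # Step 2: join with product metadata.
        joined = [{**r, **PRODUCTS[r["sku"]]} for r in load(cleaned_path)]
        joined_path = stage(staging_dir, "joined", joined)

        # Step 3: build both outputs (detail table and regional summary).
        detail = load(joined_path)
        summary: dict = defaultdict(float)
        for r in detail:
            summary[r["region"]] += r["qty"] * r["price"]

    # Leaving the with-block deletes the staging directory and everything in it.
    return detail, dict(summary), staging_dir


if __name__ == "__main__":
    detail, summary, staging_dir = run_pipeline()
    print(summary)  # {'North': 20.0, 'South': 25.5}
    print("staging still exists:", os.path.exists(staging_dir))  # False
```

The design choice worth noticing is that cleanup is tied to the scope of the run rather than to a separate job, so there is no code path that can forget it.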

Seamless Flow Across Fabric

Imagine building a single dataflow and having it immediately feed your Lakehouse, Warehouse, and Power BI dashboards without exporting, importing, or writing a single line of “glue” logic. That’s not a stretch goal in Gen2; that’s the baseline. If you’re working inside Fabric’s native environment, the days of creating one version for analytics, another for storage, and a third for machine learning jobs are over.

Before Gen2, moving the same data across those layers meant juggling several disconnected steps. You might start inside Power Query for initial transformations, then export to a CSV or push the data into Azure Data Lake. From there, you’d set up a separate load into your warehouse, often through a Data Factory pipeline. Once in the warehouse, you’d manage linked tables for reporting, manually configure refresh schedules, and hope none of it fell out of sync. Every extra system in the chain was another opportunity for drift: schema mismatches, refreshes failing on one side, or logic updates making it into one version but not the others.

That fragmentation came with real consequences. Say you’ve got a revenue dataset coming in from multiple regions. In Power BI, the model owner might apply logic to reclassify certain products under new categories. Meanwhile, in the warehouse, a separate SQL job groups them differently for operational reporting. On paper, both look correct; they just don’t agree. So the finance dashboard shows one number, the inventory planning report shows another, and you spend a week reconciling a problem that didn’t exist at the source. The fix often meant rewriting transformations in multiple tools to get both sides aligned, which never felt like a good use of anyone’s time.

Gen2 addresses this head-on. Connectors aren’t just wider in coverage; they’re built as Fabric-native pathways. When you publish a dataflow in Gen2, every service in your Fabric workspace (Lakehouse, Warehouse, Power BI) can read from it directly, with no intermediate scripts or table mapping. The transformation logic isn’t copied into each destination; it’s referenced from the same definition. That means if you change the classification rule for those products once in the dataflow, every connected workload picks it up on its next refresh. Refresh schedules are unified because they’re based on a single source of truth, not chained triggers between unrelated jobs.

Picture a sales analytics team asked to provide both quarterly executive dashboards and a dataset for data scientists training churn prediction models. In the old setup, they’d build the Power BI layer first, then extract and clean a separate dataset for the ML team. In Gen2, they author the ETL once. The summary tables feed directly into Power BI, while the cleaned transactional data is available in the Lakehouse for machine learning. There’s no “ML extract” to maintain. When the sales categorization changes, both the dashboards and the model training data reflect it on the next refresh.

That single-definition model extends to collaboration. Gen2’s version control integration means analysts and developers can work off the same dataflow repository. A developer can branch the dataflow to test a new transformation without freezing the production flow. Analysts can keep iterating on visualizations, knowing the main ETL stays stable. When the change is approved, it’s merged back into the main branch, and everyone gets the update. No more passing around copies of queries or waiting for one person to “finish” before another can start.

Because these pipelines are Fabric-native, they also tie into newer features like Fabric streaming. That means parts of your dataflow can handle real-time ingestion (think live IoT sensor data or transaction streams) and feed it into the same data model that batch jobs use. Your Lakehouse, Warehouse, and dashboards stay in sync whether the data arrived five seconds ago or as part of a nightly run.

The result is that reusable ETL in Fabric stops being an aspirational idea. You really can maintain one transformation path and have it reliably feed every downstream service without data being reshaped or reinterpreted along the way. That’s not just good for consistency; it’s an operational advantage. And when you clear out all those manual handoffs, you also free up headroom to manage the resources behind it more intelligently, which Gen2’s architecture makes a lot easier.
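
The “change the rule once, every output follows” behavior can be sketched in a few lines of Python. Again, this is a conceptual model rather than Fabric’s API: `dataflow`, `classify`, the prefix-based rules, and the sample revenue rows are all hypothetical. One transformation path produces both the detailed rows a Lakehouse or ML consumer would read and the summary a dashboard would read, so the two cannot disagree about categories.

```python
from collections import defaultdict

# Hypothetical revenue rows from multiple regions.
SALES = [
    {"product": "tablet-pro", "revenue": 800.0},
    {"product": "tablet-mini", "revenue": 300.0},
    {"product": "laptop-air", "revenue": 1200.0},
]


def classify(product: str, rules: dict[str, str]) -> str:
    """Map a product to a category by its name prefix (e.g. 'tablet-pro' -> 'tablet')."""
    prefix = product.split("-")[0]
    return rules.get(prefix, "Other")


def dataflow(rows: list[dict], rules: dict[str, str]) -> tuple[list[dict], dict[str, float]]:
    """One transformation path that produces both downstream outputs."""
    # Detailed, classified rows: what a Lakehouse / ML consumer would read.
    detail = [{**r, "category": classify(r["product"], rules)} for r in rows]
    # Revenue by category: what a dashboard would read, derived from the same rows.
    summary: dict[str, float] = defaultdict(float)
    for r in detail:
        summary[r["category"]] += r["revenue"]
    return detail, dict(summary)


if __name__ == "__main__":
    rules_v1 = {"tablet": "Mobile", "laptop": "Computing"}
    _, summary_v1 = dataflow(SALES, rules_v1)
    print(summary_v1)  # {'Mobile': 1100.0, 'Computing': 1200.0}

    # Change the classification rule once; both outputs pick it up on the next run.
    rules_v2 = {**rules_v1, "tablet": "Computing"}
    detail_v2, summary_v2 = dataflow(SALES, rules_v2)
    print(summary_v2)  # {'Computing': 2300.0}
    print({r["category"] for r in detail_v2})  # {'Computing'}
```

Because `summary` is computed from `detail` in the same call, a finance view and an ML view built this way cannot drift apart the way two independently maintained copies could.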

Resource Control Without the Guesswork

What if you could scale ETL performance exactly when you need it, without touching your queries, and the meter only ran while it was in use? That’s the shift Gen2 brings. You aren’t locked into one fixed level of resources that’s either too much most of the time or not enough when heavy jobs hit.

In Gen1, resource scaling was more like a blunt instrument than a dial. You picked your capacity tier, paid for it all month, and hoped it could handle peak workloads. If a job failed because there wasn’t enough power during a spike, the only option was to provision more, often permanently, just to cover those outlier events. And when you did overprovision to avoid those failures, the extra cost sat on the books every day, whether or not you used the capacity. Technical teams saw it as insurance; finance teams saw it as waste.

That tension played out in every reporting cycle. Underprovision, and you’d miss refresh deadlines or spend hours rerunning failed jobs. Overprovision, and you were essentially buying idle horsepower “just in case.” The lack of transparency didn’t help either. It was hard to predict how much resource a new dataflow would actually consume until it ran in production. By then, you were already in damage-control mode.

Gen2 changes the equation with compute separation and on-demand scaling. Processing runs in its own environment, separate from storage, so you can scale compute up or down without touching your data footprint. Need a short-term boost for a big job? You set the compute to a higher level for that run. When the work finishes, it scales back down, and you’re no longer paying for resources you don’t need. The rest of the time, you can run on a lower baseline that still meets day-to-day needs.

Take a common example: a quarterly data load. Imagine an ETL pipeline that usually processes a few million rows per night. At quarter’s end, that balloons to hundreds of millions due to consolidated reporting and deeper historical pulls. In Gen1, that spike could crush your existing capacity, forcing you to either accept failures or pay for higher performance all the time. In Gen2, you can allocate a bigger compute tier for just that quarterly run. It chews through the backlog quickly, then returns to the standard level. There’s no architectural change, no query rewrites, and no long-term capacity increase hanging over your budget.

Managed staging plays its part in cost control too. Because Gen2 clears out temporary data automatically at the end of a run, you’re not paying for storage that only exists to hold intermediate calculations. In Gen1, those temp layers often lingered, either forgotten or saved “just in case,” slowly inflating your storage bills. Now, temp data lives only as long as the transformation needs it, then it’s gone. You still get the performance benefit of staging without the hidden, accumulating cost.

This approach to elasticity isn’t just convenient; it has real financial impact whenever workloads are spiky, because you stop paying for peak capacity during the hours you don’t need it. It’s the difference between running your car at full throttle all day because you might need to overtake someone and pressing the accelerator only when you actually do. Costs track actual usage rather than an inflated worst-case estimate.

The predictability this creates is a rare win for both sides of the house. Technical teams can design flows knowing they have a safety net for heavier-than-normal workloads without ballooning everyday operating costs. Finance teams get usage patterns that line up with value delivered: no more paying for 100% capacity to handle the 10% of the time when you really need it. And because the scaling is configuration-driven rather than code-driven, changing it doesn’t add development debt.

The net effect is a platform where performance tuning and cost management aren’t at odds. You can aim for faster refreshes in specific scenarios while keeping a leaner footprint the rest of the time. That control, without the constant guesswork, sets the stage for something equally important: making ETL a true team effort instead of a one-person-at-a-time process.
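
The “100% capacity for 10% of the time” argument is simple arithmetic, sketched below in Python. Every number here is hypothetical (unit counts, hours, and a price of 1.0 per compute-unit-hour), and the `Workload` type is an illustrative model; Fabric bills capacity in its own units and pricing, so treat this as the shape of the saving, not a cost estimate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    hours_in_month: int  # total hours the platform must be available
    peak_hours: int      # hours that need the high tier (e.g. quarter-end runs)
    baseline_units: int  # compute units that cover normal daily loads
    peak_units: int      # compute units the heaviest run needs


def fixed_cost(w: Workload, price_per_unit_hour: float) -> float:
    """Provision for the peak all month long (the 'insurance' approach)."""
    return w.peak_units * w.hours_in_month * price_per_unit_hour


def elastic_cost(w: Workload, price_per_unit_hour: float) -> float:
    """Run at baseline, paying for peak units only during the peak hours."""
    normal_hours = w.hours_in_month - w.peak_hours
    unit_hours = w.baseline_units * normal_hours + w.peak_units * w.peak_hours
    return unit_hours * price_per_unit_hour


if __name__ == "__main__":
    # Hypothetical: a 720-hour month where 10% of hours need 5x the baseline.
    w = Workload(hours_in_month=720, peak_hours=72, baseline_units=2, peak_units=10)
    price = 1.0  # hypothetical cost per compute-unit-hour, not a real Fabric price
    f, e = fixed_cost(w, price), elastic_cost(w, price)
    print(f"fixed: {f:.0f}, elastic: {e:.0f}, saving: {1 - e / f:.0%}")
    # fixed: 7200, elastic: 2016, saving: 72%
```

The saving depends entirely on how spiky the workload is: with `peak_hours=0` and equal unit counts, the two models cost exactly the same.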

Collaborating Without Collisions

ETL has always worked fine when one person owns the process. The trouble starts when two people open the same file, make their own changes, and hit save. One set of transformations quietly replaces the other, and you only find out later when reports start returning different numbers. For years, teams have avoided this by handing off files like relay batons, or by splitting work into separate, semi-duplicated flows. That kept collisions down, but it also meant slower development and more places for bugs to hide.

The common pattern in most Microsoft-based ETL setups went something like this: an analyst would handle cleaning and shaping data in Power Query for a BI model. Meanwhile, a developer might add steps to prepare a warehouse table. Both would work from slightly different copies of the same logic, often exported and imported manually. Weeks later, someone would notice that one version had a new filter or field and the other didn’t. You could try to merge them, but with no native system tracking those changes, you were guessing which version to trust.

In traditional environments, version control for ETL was mostly an afterthought. Some teams tried manual documentation: naming conventions and change logs in Excel. Others bolted on third-party Git syncs that exported M code from Power Query. Those solutions sort of worked if you were careful, but they weren’t built into the ETL process. That made them easy to skip in the name of speed, and once you bypassed the process, the audit trail was gone.

Gen2 changes that by baking Git integration directly into the dataflow layer. It’s not an export step or an optional plugin. The dataflow itself can live in a Git repo as the source of truth. You can create branches, commit changes, and merge updates just as you would in a software development project. The difference is that you’re working with transformation logic instead of application code.

That opens up development patterns that just weren’t possible before. Say your production dataflow is feeding daily executive dashboards. You need to test a new transformation that changes how regional sales are grouped. In Gen1, you’d either risk altering the live flow or have to clone the whole thing into a separate workspace for testing. In Gen2, you open a development branch of the same dataflow. Your changes run in isolation, leaving production untouched. You can see the results, run checks, and merge only when you’re confident it’s correct.

Because it’s Git, branching is only part of the story. Code reviews and pull requests now apply to ETL. If you work in a team, a second pair of eyes can review your transformation logic before it hits production. That goes beyond formatting: a reviewer can see exactly which filters, joins, or calculated columns you’ve added or removed in a commit. It makes it easier to catch logic errors or unintended field drops before they cause wider issues.

Every change is also tracked historically. If an upstream schema change breaks part of the flow and you’re not sure when it happened, you can scroll back through commits to find the last version that worked. Rolling back isn’t a matter of retyping steps from memory; it’s checking out the prior commit. That’s a level of control most ETL teams haven’t had inside the Microsoft ecosystem without heavy customization.

For engineering teams that have already adopted this workflow, it’s done more than prevent accidental overwrites. Controlled deployment flows mean fewer unplanned outages, cleaner audit trails for governance, and the ability to push updates on their own schedule instead of rushing fixes into live workflows. It also reduces reliance on single “data heroes” who know exactly which version of a flow is correct, because the repo’s history makes that visible to everyone.

The end result is that ETL work in Gen2 feels much less like a fragile, one-person task and much more like a modern software project. You can have multiple contributors, safe experimentation, peer review, and a full history, all built into the platform. Which ties back to the bigger picture we’ve been building: every design choice in Gen2, from architecture to integration to governance, shifts ETL from a collection of workarounds to a connected, maintainable part of the Fabric ecosystem.
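
Reviewing a dataflow change in Git ultimately means reviewing a text diff of its transformation logic. The Python sketch below uses the standard library’s `difflib.unified_diff` to approximate what a pull-request view shows. The two Power Query M queries, the server name, and the branch name are hypothetical, and this is not how Fabric itself renders diffs.

```python
import difflib

# Hypothetical M query as it exists on the main branch.
MAIN = """let
    Source = Sql.Database("example-server", "SalesDb"),
    Sales = Source{[Schema = "dbo", Item = "Sales"]}[Data],
    Filtered = Table.SelectRows(Sales, each [Amount] > 0),
    Grouped = Table.Group(Filtered, {"Region"}, {{"Total", each List.Sum([Amount]), type number}})
in
    Grouped
"""

# Hypothetical feature branch: regional sales are now grouped by region and country.
BRANCH = MAIN.replace('{"Region"}', '{"Region", "Country"}')


def review_diff(
    old: str,
    new: str,
    old_name: str = "main",
    new_name: str = "feature/regional-grouping",
) -> list[str]:
    """Return a unified diff of two query versions, like a pull-request view."""
    return list(
        difflib.unified_diff(
            old.splitlines(keepends=True),
            new.splitlines(keepends=True),
            fromfile=old_name,
            tofile=new_name,
        )
    )


if __name__ == "__main__":
    print("".join(review_diff(MAIN, BRANCH)), end="")
```

The diff isolates the single changed `Table.Group` line, which is exactly the “which filters, joins, or calculated columns changed” question a reviewer needs answered; identical versions produce an empty diff.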

Conclusion

Gen2 isn’t just about faster refresh times or cleaner staging. It changes the way data teams actually work. Instead of patching together tools, you’re building workflows that live in one place, evolve together, and stay consistent across every part of Fabric. If you want to see that difference, try building a single Gen2 dataflow, hook it to your Lakehouse and Power BI, and watch how much maintenance disappears. In a few years, we may look back at pre-Gen2 ETL the way we remember dial-up internet: it worked, but going back won’t even cross our minds.

Get full access to M365 Show - Microsoft 365 Digital Workplace Daily at m365.show/subscribe