“On closed-door safety research” by richbc

- Author

- LessWrong

- Published

- Tue 19 Aug 2025

- Episode Link

- https://www.lesswrong.com/posts/2TA7HqBYdhLdJBcZz/on-closed-door-safety-research

Epistemic status: I think labs keep some safety research internal-only, though their motivations for doing so are sometimes unclear. This post explores the incentives and dynamics that may be at play, drawing on information from sources I consider reliable. That said, I'm posting to start a discussion rather than to make definitive claims.

Executive Summary

- Multiple sources point to frontier labs keeping at least some safety research internal-only.

- Additionally, I think frontier labs may be incentivised to keep at least some important safety research proprietary, a practice I refer to as "safety hoarding".

- Theories for why labs may safety-hoard include: hedging against stronger future AI legislation or an Overton window shift in public safety concerns, either of which would give compliant labs a competitive advantage; reputational gains from being "the safe lab"; safety breakthroughs being too expensive to implement, meaning it's favourable [...]

---

Outline:

(00:32) Executive Summary

(02:46) Introduction

(04:02) Hoarding Incentives

(07:23) Neutral Explanations for Unpublished Work

(08:31) In Favour of Selective Withholding

(10:00) Consequences of Safety Hoarding

(10:04) Negative

(11:32) Possibly Positive

(12:19) The Current State

(13:05) OpenAI

(15:25) Google DeepMind

(16:27) Anthropic

(17:14) A Move Towards Openness

(19:30) Grey Areas

(20:14) Precedents and Parallels

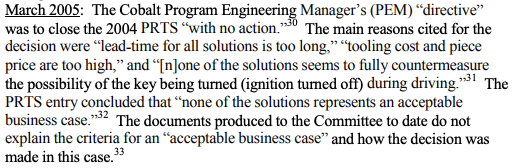

(20:35) Case Study: AV Safety Hoarding

(23:45) Other Automotive Examples

(25:40) Conclusion

(27:02) Future Work

(27:36) Acknowledgements

The original text contained 6 footnotes which were omitted from this narration.

---

First published:

August 13th, 2025

Source:

https://www.lesswrong.com/posts/2TA7HqBYdhLdJBcZz/on-closed-door-safety-research

---

Narrated by TYPE III AUDIO.
