“The Practical Value of Flawed Models: A Response to titotal’s AI 2027 Critique” by Michelle_Ma

- Author

- EA Forum Team

- Published

- Thu 26 Jun 2025

- Episode Link

- https://forum.effectivealtruism.org/posts/fKx6DkWfzJXoycWhE/the-practical-value-of-flawed-models-a-response-to-titotal-s

Crossposted from my Substack.

@titotal recently posted an in-depth critique of AI 2027. I'm a fan of his work, and this post was, as expected, phenomenal*.

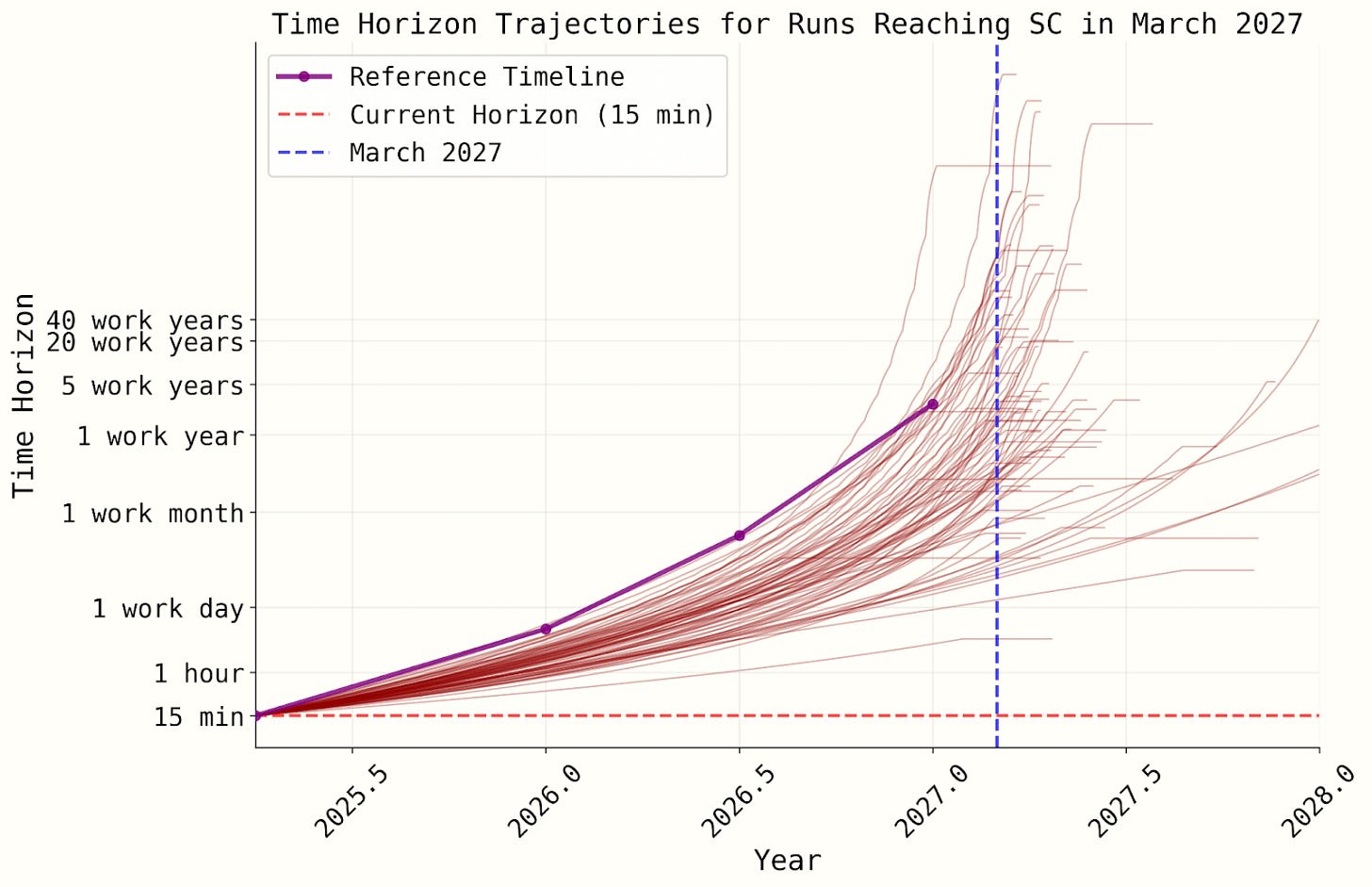

Much of the critique targets the unjustified weirdness of the superexponential time horizon growth curve that underpins the AI 2027 forecast. During my own quick excursion into the Timelines simulation code, I set the probability of superexponential growth to ~0 because, yeah, it seemed pretty sus. But I didn’t catch (or write about) the full extent of its weirdness, nor did I identify a bunch of other issues titotal outlines in detail. For example:

- The AI 2027 authors assign ~40% probability to a “superexponential” time horizon growth curve that shoots to infinity in a few years, regardless of your starting point.

- The RE-Bench logistic curve (a major part of their second methodology) is never actually used during the simulation. As a result [...]
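To see how a growth curve can reach infinity in finite time regardless of where it starts, here is a minimal Python sketch. It assumes a simple geometric form in which each doubling of the time horizon takes a fixed fraction `shrink` of the previous doubling's duration. The function names, parameters, and values are hypothetical illustrations, not taken from the AI 2027 simulation code, whose exact parameterization may differ.

```python
import math


def years_to_reach(start_horizon: float, target_horizon: float,
                   first_doubling_years: float, shrink: float) -> float:
    """Years for the time horizon to grow from start_horizon to target_horizon.

    Hypothetical model (an assumption, not the AI 2027 code): doubling k
    (k = 0, 1, 2, ...) takes first_doubling_years * shrink**k years,
    with 0 < shrink < 1.
    """
    doublings = math.log2(target_horizon / start_horizon)
    n_full = math.floor(doublings)
    # Completed doublings form a geometric series.
    total = first_doubling_years * (1 - shrink ** n_full) / (1 - shrink)
    # Pro-rate the final, partial doubling linearly (a simplification).
    total += (doublings - n_full) * first_doubling_years * shrink ** n_full
    return total


def years_to_infinity(first_doubling_years: float, shrink: float) -> float:
    """Limit of the series as the number of doublings goes to infinity.

    start_horizon does not appear: under this assumed form, the blow-up date
    depends only on the first doubling time and the shrink factor.
    """
    return first_doubling_years / (1 - shrink)


# Illustrative, made-up parameters: the first doubling takes 0.5 years and
# each later doubling takes 90% as long as the one before it.
print(years_to_infinity(0.5, 0.9))               # about 5 years
print(years_to_reach(1.0, 2.0 ** 40, 0.5, 0.9))  # 40 doublings, still under that bound
```

Under this assumed form, raising the target horizon arbitrarily high never pushes the arrival date past `first_doubling_years / (1 - shrink)`, which is one way to read the point that such a curve shoots to infinity within a few years whatever the starting horizon.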

---

Outline:

(02:50) Robust Planning: The People Doing Nothing

(06:06) Inaction is a Bet Too

(07:21) The Difficulty of Robust Planning in Governance

(08:52) Corner Solutions & Why Flawed Models Still Matter

(10:15) Conclusion: Forecasting's Catch-22

---

First published:

June 25th, 2025

---

Narrated by TYPE III AUDIO.
