“Ten AI safety projects I’d like people to work on” by JulianHazell

- Author: EA Forum Team
- Published: Fri 25 Jul 2025
- Episode Link: https://forum.effectivealtruism.org/posts/D6ECZQMFcskpiGP7Q/ten-ai-safety-proj

The Forum Team crossposted this with permission from Secret Third Thing. The author may not see comments.

Listicles are hacky, I know. But in any case, here are ten AI safety projects I'm pretty into.

I’ve said it before, and I’ll say it again: I think there's a real chance that AI systems capable of causing a catastrophe (up to and including human extinction) will be developed in the next decade. This is why I spend my days making grants to talented people working on projects that could reduce catastrophic risks from transformative AI.

I don't have a spreadsheet where I can plug in grant details and get an estimate of basis points of catastrophic risk reduction (and if I did, I wouldn't trust the results). But over the last two years working in this role, I’ve at least developed some Intuitions™ about promising projects that I’d like [...]

---

Outline:

(01:09) 1. AI security field-building

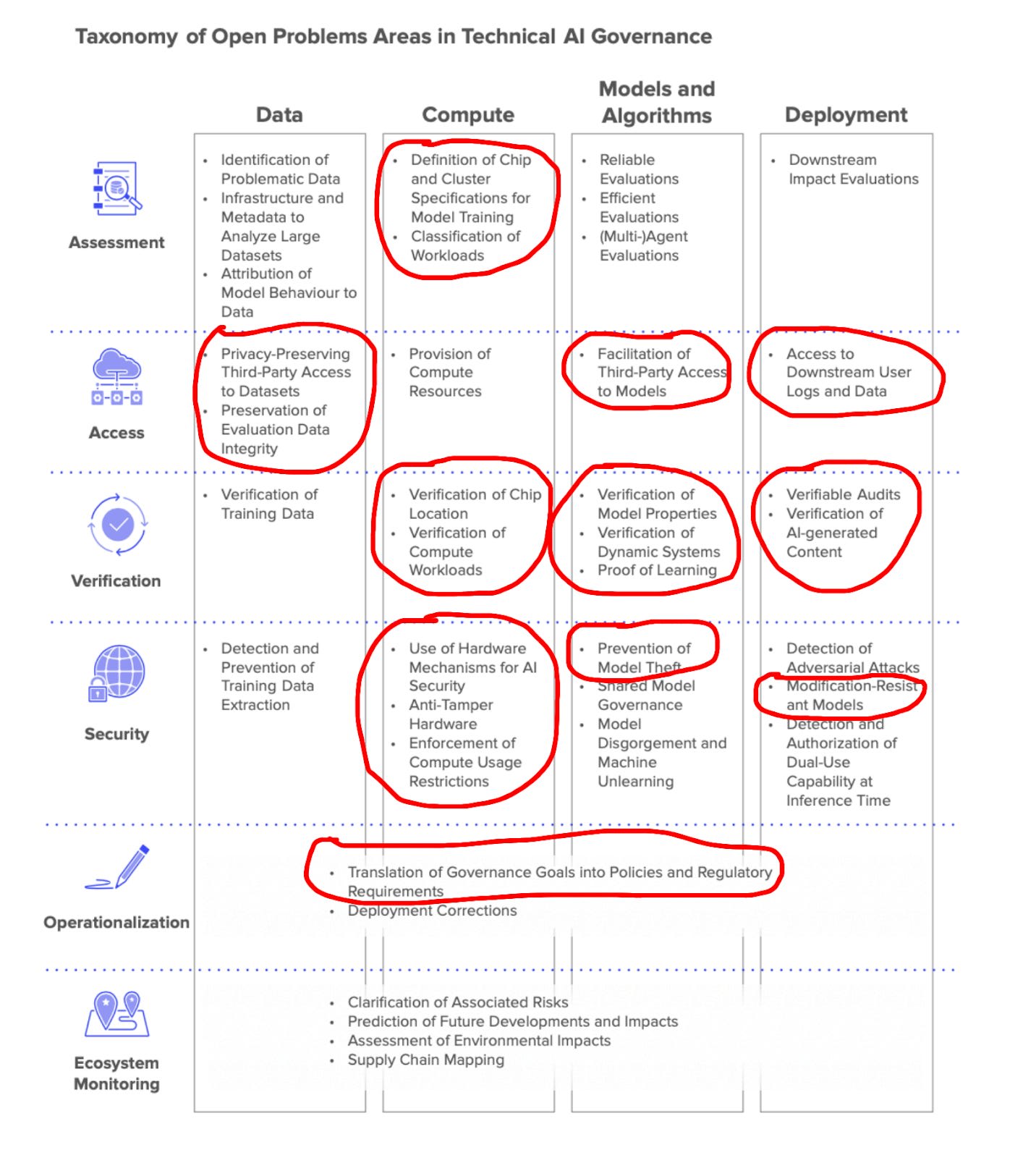

(02:37) 2. Technical AI governance research organization

(04:30) 3. Tracking sketchy AI agent behaviour in the wild

(06:39) 4. AI safety communications consultancy

(08:25) 5. AI lab monitor

(10:50) 6. AI safety living literature reviews

(12:36) 7. $10 billion AI resilience plan

(14:00) 8. AI tools for fact-checking

(15:35) 9. AI auditors

(17:20) 10. AI economic impacts tracker

(19:25) Caveats, hedges, clarifications

---

First published:

July 24th, 2025

Source:

https://forum.effectivealtruism.org/posts/D6ECZQMFcskpiGP7Q/ten-ai-safety-proj

---

Narrated by TYPE III AUDIO.
